Why-So-Deep: Towards Boosting Previously Trained Models for Visual Place Recognition

IEEE Robotics and Automation Letters (RA-L) and ICRA, 2022.

Authors: M. Usman Maqbool Bhutta, Yuxiang Sun, Darwin Lau, Ming Liu

Introduction

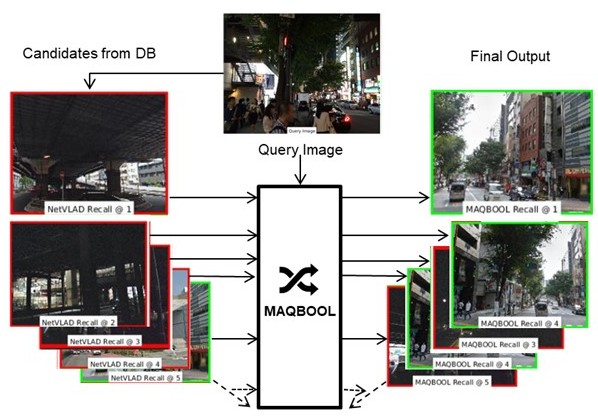

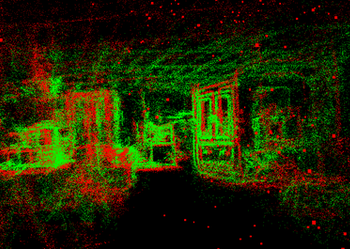

Abstract: Deep learning-based image retrieval techniques for loop closure detection demonstrate satisfactory performance. However, it is still challenging to achieve high-level performance with previously trained models in different geographical regions. This paper addresses the problem of deploying such models with simultaneous localization and mapping (SLAM) systems in a new environment. The general baseline approach uses additional information, such as GPS, sequential keyframe tracking, and re-training on the whole environment, to enhance the recall rate. We propose a novel approach for improving image retrieval based on previously trained models. We present an intelligent method, MAQBOOL, to amplify the power of pre-trained models for better image recall, and its application to real-time multiagent SLAM systems. We achieve comparable image retrieval results at a low descriptor dimension (512-D), compared to the high descriptor dimension (4096-D) of state-of-the-art methods. We use spatial information to improve the recall rate in image retrieval on pre-trained models.

Source Code

Please follow our :octocat: GitHub page.

MAQBOOL: Multiple AcuQitation of perceptiBle regiOns for priOr Learning

⭐️ If you like this repository, give it a star on GitHub! ⭐️

Video

BibTeX

@article{whysodeepBhutta22,
  author={Bhutta, M. Usman Maqbool and Sun, Yuxiang and Lau, Darwin and Liu, Ming},
  journal={IEEE Robotics and Automation Letters},
  title={Why-So-Deep: Towards Boosting Previously Trained Models for Visual Place Recognition},
  year={2022},
  volume={7},
  number={2},
  pages={1824-1831},
  doi={10.1109/LRA.2022.3142741}
}

Precomputed Files

Results Files For The Comparison

Our MAQBOOL result .dat files are available for comparison in the :octocat: GitHub repository. You can download them and plot them with TikZ (LaTeX), plot.ly, etc. If you need help plotting the results using TikZ and LaTeX, please follow this 💡 little tutorial.

Download and Use

An example file name is shown below, with each component labelled: \(\overbrace{\underbrace{\text{vd16}}_{\text{CNN}}\_\underbrace{\text{tokyoTM}}_{\text{pretrained on}}\_\text{to}\_\underbrace{\text{tokyo247}}_{\text{tested on}}\_\underbrace{\text{maqbool}}_{\text{method}}\_\underbrace{\text{DT}\_100}_{\text{distance tree size}}\_\underbrace{512}_{\text{feature dimension}}\text{.dat}}^{\text{file name}}\)
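When working with many result files, it can help to split each name back into its labelled parts. The following is a minimal Python sketch, assuming every result file follows the pattern of the single example above; the class `ResultFileName` and the function `parse_result_file_name` are hypothetical helpers and are not part of the MAQBOOL repository.

```python
import re
from typing import NamedTuple


class ResultFileName(NamedTuple):
    """Components of a result file name (hypothetical helper type)."""
    cnn: str                 # backbone tag, e.g. "vd16"
    pretrained_on: str       # dataset the network was pre-trained on
    tested_on: str           # dataset the results were computed on
    method: str              # e.g. "maqbool"
    distance_tree_size: int  # number following "DT_"
    feature_dimension: int   # descriptor dimension, e.g. 512


# Pattern inferred from the one documented example; other files may deviate.
_PATTERN = re.compile(
    r"^(?P<cnn>[^_]+)_(?P<pretrained_on>[^_]+)_to_(?P<tested_on>[^_]+)"
    r"_(?P<method>[^_]+)_DT_(?P<dt>\d+)_(?P<dim>\d+)\.dat$"
)


def parse_result_file_name(name: str) -> ResultFileName:
    """Split a result file name into its labelled components."""
    match = _PATTERN.match(name)
    if match is None:
        raise ValueError(f"unexpected result file name: {name!r}")
    return ResultFileName(
        cnn=match["cnn"],
        pretrained_on=match["pretrained_on"],
        tested_on=match["tested_on"],
        method=match["method"],
        distance_tree_size=int(match["dt"]),
        feature_dimension=int(match["dim"]),
    )
```

For instance, `parse_result_file_name("vd16_tokyoTM_to_tokyo247_maqbool_DT_100_512.dat")` yields the fields named in the labelled example, and a name that does not fit the assumed pattern raises `ValueError` instead of being silently misread.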

Directory Tree

maqbool-data
├── models
│   ├── vd16_pitts30k_to_tokyoTM_4096_50_mdls.mat
│   ├── vd16_pitts30k_to_tokyoTM_512_50_mdls.mat
│   ├── vd16_tokyoTM_to_tokyoTM_4096_50_mdls.mat
│   └── vd16_tokyoTM_to_tokyoTM_512_50_mdls.mat
├── post_computed_files   # for very fast results
│   ├── vd16_pitts30k_to_pitts30k_4096_50
│   ├── vd16_pitts30k_to_pitts30k_512_50
│   ├── vd16_pitts30k_to_tokyo247_4096_50
│   ├── vd16_pitts30k_to_tokyo247_512_50
│   ├── vd16_pitts30k_to_tokyoTM_4096_50_data.mat
│   ├── vd16_pitts30k_to_tokyoTM_512_50_data.mat
│   ├── vd16_tokyoTM_to_tokyo247_4096_50
│   ├── vd16_tokyoTM_to_tokyo247_512_50
│   ├── vd16_tokyoTM_to_tokyoTM_4096_50_data.mat
│   └── vd16_tokyoTM_to_tokyoTM_512_50_data.mat
└── pre_computed_files    # precomputed landmarks (use to save time)
    ├── vd16_pitts30k_to_pitts30k_4096_50
    ├── vd16_pitts30k_to_pitts30k_512_50
    ├── vd16_pitts30k_to_tokyo247_4096_50
    ├── vd16_pitts30k_to_tokyo247_512_50
    ├── vd16_pitts30k_to_tokyoTM_4096_50
    ├── vd16_pitts30k_to_tokyoTM_512_50
    ├── vd16_tokyoTM_to_tokyo247_4096_50
    ├── vd16_tokyoTM_to_tokyo247_512_50
    ├── vd16_tokyoTM_to_tokyoTM_4096_50
    └── vd16_tokyoTM_to_tokyoTM_512_50
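After downloading, it may be useful to check that the `pre_computed_files` folder is complete. The sketch below is a standalone Python helper, not part of the repository; it assumes the ten folder names listed in the tree above are the full expected set, and the function names are hypothetical.

```python
from itertools import product
from pathlib import Path
from typing import List

# (pretrained on, tested on) pairs that appear under pre_computed_files above.
PAIRS = [
    ("pitts30k", "pitts30k"),
    ("pitts30k", "tokyo247"),
    ("pitts30k", "tokyoTM"),
    ("tokyoTM", "tokyo247"),
    ("tokyoTM", "tokyoTM"),
]
DIMENSIONS = [4096, 512]


def expected_precomputed_dirs() -> List[str]:
    """Folder names expected under pre_computed_files, per the listing above."""
    # The trailing "50" is copied verbatim from the listing; its meaning is
    # not stated there, so it is treated as an opaque suffix.
    return [
        f"vd16_{src}_to_{dst}_{dim}_50"
        for (src, dst), dim in product(PAIRS, DIMENSIONS)
    ]


def missing_precomputed_dirs(root: Path) -> List[str]:
    """Return the expected folders that are absent under root/pre_computed_files."""
    base = root / "pre_computed_files"
    return [name for name in expected_precomputed_dirs() if not (base / name).is_dir()]
```

Calling `missing_precomputed_dirs(Path("maqbool-data"))` returns an empty list when every folder from the listing is present locally.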

The file names are explained further in the table below.

| Test Dataset | Pre-trained Models | Feature Dimension | Configuration and File |
|---|---|---|---|
| Tokyo247 | TokyoTM NetVLAD: dbFeatFn, qFeatFn | 512-D | [config_wsd.m: f_dimension = 512, net_dataset = 'tokyoTM'; job_datasets = 'tokyo247';] File: vd16_tokyoTM_to_tokyoTM_512_mdls.mat |
| Tokyo247 | TokyoTM NetVLAD: dbFeatFn, qFeatFn | 4096-D | [config_wsd.m: f_dimension = 4096, net_dataset = 'tokyoTM'; job_datasets = 'tokyo247';] File: vd16_tokyoTM_to_tokyoTM_4096_mdls.mat |
| Tokyo247 | Pittsburgh NetVLAD: dbFeatFn, qFeatFn | 512-D | [config_wsd.m: f_dimension = 512, net_dataset = 'pitts30k'; job_datasets = 'tokyo247';] File: vd16_pitts30k_to_tokyoTM_512_mdls.mat |
| Tokyo247 | Pittsburgh NetVLAD: dbFeatFn, qFeatFn | 4096-D | [config_wsd.m: f_dimension = 4096, net_dataset = 'pitts30k'; job_datasets = 'tokyo247';] File: vd16_pitts30k_to_tokyoTM_4096_mdls.mat |
| Pittsburgh | Pittsburgh NetVLAD: dbFeatFn, qFeatFn | 512-D | [config_wsd.m: f_dimension = 512, net_dataset = 'pitts30k'; job_datasets = 'pitts30k';] File: vd16_pitts30k_to_pitts30k_512_mdls.mat |
| Pittsburgh | Pittsburgh NetVLAD: dbFeatFn, qFeatFn | 4096-D | [config_wsd.m: f_dimension = 4096, net_dataset = 'pitts30k'; job_datasets = 'pitts30k';] File: vd16_pitts30k_to_pitts30k_4096_mdls.mat |
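The table can also be read as a lookup from the three `config_wsd.m` settings to a model file. The following Python sketch encodes the rows verbatim as a dictionary rather than deriving names from a rule, since the table gives no general naming formula; `MODEL_FILES` and `model_file_for` are hypothetical names, and this helper is separate from the project's MATLAB code.

```python
from typing import Dict, Tuple

# (job_datasets, net_dataset, f_dimension) -> model file name,
# copied row by row from the table above.
MODEL_FILES: Dict[Tuple[str, str, int], str] = {
    ("tokyo247", "tokyoTM", 512): "vd16_tokyoTM_to_tokyoTM_512_mdls.mat",
    ("tokyo247", "tokyoTM", 4096): "vd16_tokyoTM_to_tokyoTM_4096_mdls.mat",
    ("tokyo247", "pitts30k", 512): "vd16_pitts30k_to_tokyoTM_512_mdls.mat",
    ("tokyo247", "pitts30k", 4096): "vd16_pitts30k_to_tokyoTM_4096_mdls.mat",
    ("pitts30k", "pitts30k", 512): "vd16_pitts30k_to_pitts30k_512_mdls.mat",
    ("pitts30k", "pitts30k", 4096): "vd16_pitts30k_to_pitts30k_4096_mdls.mat",
}


def model_file_for(job_datasets: str, net_dataset: str, f_dimension: int) -> str:
    """Look up the model file listed for a given configuration."""
    key = (job_datasets, net_dataset, f_dimension)
    try:
        return MODEL_FILES[key]
    except KeyError:
        # Only combinations that appear in the table are supported.
        raise ValueError(f"no table row for configuration {key}") from None
```

Keeping the mapping explicit means a configuration that is not in the table fails with a clear error rather than producing a guessed file name.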